Handling real-time data efficiently has become a critical requirement for modern businesses. Apache Kafka use cases demonstrate how this open-source, distributed event streaming platform helps organizations process and manage data streams seamlessly.

While Kafka offers significant advantages, it’s important to determine when it’s the right fit and when other solutions might work better. This guide explores key Apache Kafka Use Cases, highlighting scenarios where it excels and instances where alternative options should be considered.

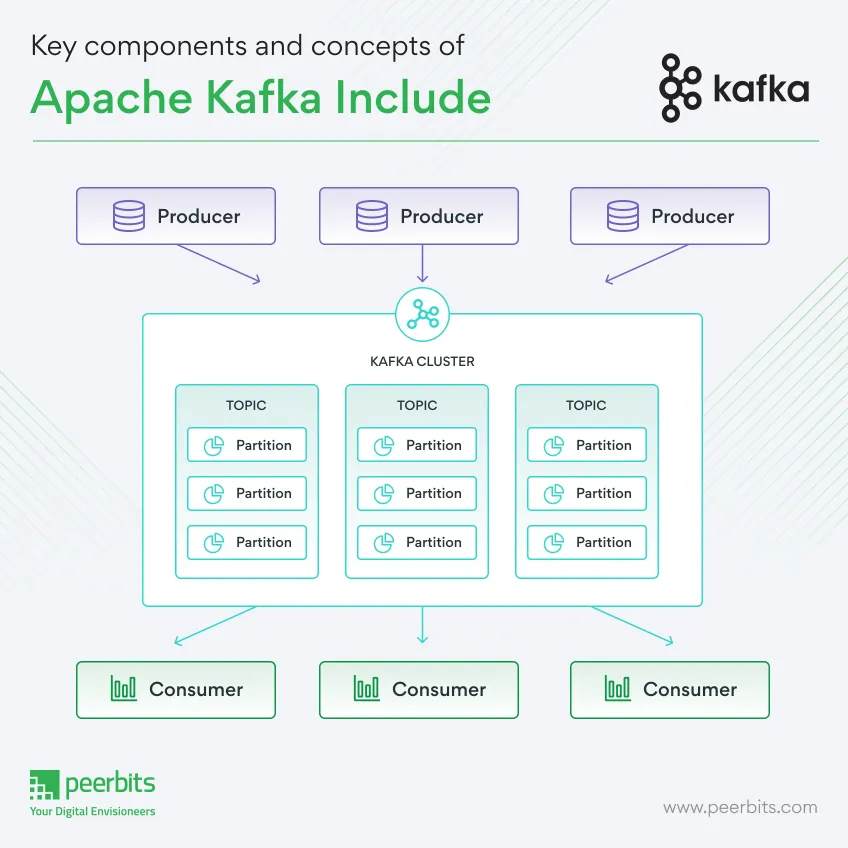

Key components and concepts of Apache Kafka include

Apache Kafka is an open-source distributed event streaming platform developed by the Apache Software Foundation. It is designed to handle large volumes of real-time data and facilitate the seamless, high-throughput, and fault-tolerant transmission of data streams across different applications and systems.

Kafka was originally created by LinkedIn and later open-sourced as part of the Apache project, becoming a fundamental tool for building real-time data pipelines and event-driven architectures.

Topics & partitions

Data streams in Kafka are organized into topics, which serve as logical channels for publishing and subscribing to data. Each topic can have multiple producers and consumers. Each topic is divided into partitions to enable parallel processing and distribution of data. Partitions are replicated across multiple brokers for fault tolerance.

Producers & consumers

Producers are responsible for sending data records to Kafka topics. They can be various data sources, applications, or systems that generate data. Consumers read and process data from Kafka topics. They can be applications, services, or systems that subscribe to one or more topics to receive real-time data updates.

Brokers

Kafka brokers form the core of the Kafka cluster. They store and manage data records, serving as the central communication point for producers and consumers. Kafka clusters can consist of multiple brokers for scalability and fault tolerance.

ZooKeeper

While Kafka has been moving towards removing its dependency on Apache ZooKeeper for metadata management, older versions still rely on ZooKeeper for cluster coordination and management.

Retention

Kafka can retain data for a configurable period, allowing consumers to replay historical data or enabling batch processing of data.

Streams and connect

Kafka offers Kafka Streams for stream processing applications and Kafka Connect for building connectors to integrate with external data sources and sinks.

Apache Kafka is widely used for various use cases, including real-time data streaming, log aggregation, event sourcing, data integration, complex event processing (CEP), change data capture (CDC), and more.

It provides strong durability guarantees and is known for its high throughput, low latency, and scalability, making it a popular choice for organizations dealing with large volumes of data and requiring real-time data processing and analysis.

Use cases for Apache Kafka

We will uncover how Apache Kafka serves as the backbone for various use cases, providing a reliable and scalable solution for handling data streams.

Whether you are looking to build a real-time data analytics platform, implement event-driven architectures, or enable IoT communication, Kafka offers a robust foundation to transform your data management strategies.

Real-time data streaming

Apache Kafka is the go-to solution when you require real-time data streaming at scale. It excels in scenarios where large volumes of data must be ingested, processed, and disseminated with minimal latency.

Industries such as finance, e-commerce, and telecommunications rely on Kafka to power applications that demand up-to-the-minute information.

Log aggregation

Kafka serves as a centralized repository for logs generated by diverse services and applications. This aggregation simplifies log analysis, debugging, and troubleshooting, making it a favorite choice in DevOps and system monitoring.

Event sourcing

In event-driven architectures, Kafka shines by maintaining a complete and ordered history of events.

This historical context is invaluable in domains like finance, healthcare, and e-commerce, where auditing, traceability, and compliance requirements are stringent.

Data integration

Kafka's versatility makes it an excellent choice for data integration across heterogeneous systems, databases, and applications.

It enables the seamless flow of data in complex microservices architectures, enhancing interoperability and reducing data silos.

Messaging

Kafka can be employed as a robust messaging system for real-time communication between applications.

This use case finds applications in chat applications, notifications, and managing the deluge of data generated by IoT ecosystems.

Batch data processing

Kafka's durability and data retention capabilities make it well-suited for batch data processing.

This proves beneficial when you need to reprocess data, backfill historical records, or maintain a complete data history.

Complex Event Processing (CEP)

Organizations dealing with high-volume, high-velocity data streams, such as financial institutions and network monitoring, leverage Kafka for complex event processing.

It enables the detection of intricate patterns and anomalies in real time, aiding fraud detection and situational awareness.

Change Data Capture (CDC)

Kafka's ability to capture and replicate changes made to databases in real-time positions it as a vital component for building data warehouses, data lakes, and analytics platforms.

It simplifies the process of data synchronization and keeps analytical systems up-to-date.

Read more: Everything you need to about Apache Kafka

When Not to Use Apache Kafka

While Apache Kafka is a powerful and versatile distributed event streaming platform, it's important to recognize that it may not always be the best fit for every data processing scenario. Understanding the limitations and scenarios where Apache Kafka might not be the optimal choice is crucial for making informed decisions when architecting your data infrastructure.

In this section, we'll explore situations and use cases where Apache Kafka may not be the most suitable solution, helping you determine when to consider alternative technologies or approaches.

Simple request-response communication

If your application predominantly relies on simple request-response communication and doesn't involve real-time streaming or event-driven patterns, traditional RESTful APIs or RPC mechanisms might be more straightforward and suitable.

Small-scale projects

For small-scale projects with limited data volume and velocity, setting up and managing Kafka clusters could be overly complex and resource-intensive. Simpler data integration tools or message queues may offer a more cost-effective solution.

High latency tolerance

If your application can tolerate higher latencies, other solutions may be easier to implement and maintain. Kafka's primary strength lies in low-latency, real-time data streaming, and may be over-engineered for use cases with more relaxed latency requirements.

Limited resources

Organizations lacking the necessary resources, whether human, hardware, or financial, to manage and maintain Kafka clusters might consider managed Kafka services or alternative solutions that require less overhead.

Monolithic applications

If your application architecture remains predominantly monolithic and does not embrace microservices or event-driven components, the benefits of Kafka's event streaming may be limited, and simpler communication mechanisms may suffice.

Lack of expertise

Implementing and maintaining Kafka effectively requires expertise. If your team lacks experience with Kafka or event-driven architectures, consider investing in training or consulting services to ensure successful adoption.

Companies using Apache Kafka

Many top companies, including Fortune 100 giants, rely on Apache Kafka for real-time data streaming, event-driven architecture, and large-scale data processing.

-

LinkedIn: Uses Kafka to track user activities, send notifications, and power real-time analytics.

-

Twitter: Processes millions of tweets per second for analytics, engagement tracking, and content ranking.

-

Netflix: Streams real-time logs, monitors user behavior, and optimizes content recommendations.

-

Adidas: Uses Kafka for inventory management, order processing, and real-time customer insights.

-

Cisco: Supports network security, real-time monitoring, and IoT data processing.

-

PayPal: Handles fraud detection, financial transactions, and secure payment processing in real-time.

Kafka helps these companies scale, process high-velocity data, and maintain seamless operations.

Conclusion

Apache Kafka is a reliable solution for real-time data streaming, event-driven architectures, and large-scale data integration. Understanding Apache Kafka use cases helps in determining whether it aligns with your business needs.

It excels in handling high-throughput data pipelines, but in some cases, alternative solutions might be more suitable. Projects that require simplicity, lower resource consumption, or different communication patterns may benefit from other approaches. Evaluating your project's scale and requirements will help in making the right decision.