Apache Kafka is an open-source distributed event streaming platform that facilitates real-time data processing. Originally developed at LinkedIn, Kafka is now used widely to handle large volumes of data from various sources.

Because Kafka can process vast amounts of data with low latency, it is critical for applications requiring real-time insights.

From message queues to data pipelines, Kafka simplifies how data is managed and transmitted across systems, making it highly effective for tasks like stream processing and event-driven architectures.

This blog provides businesses with a thorough Apache Kafka guide, focusing on its history, architecture, and application in real-time streaming and big data.

What is Apache Kafka?

Apache Kafka is a distributed event streaming platform that processes real-time data at scale. It lets businesses manage, store, and analyze data as it’s generated, making it essential for modern data systems. Kafka is designed to handle large volumes of data, ensuring smooth data flow across different platforms.

Kafka’s use case for real-time data streaming

Apache Kafka is designed to handle real-time data streaming, making it perfect for use cases like log aggregation, monitoring, and stream processing.

It lets businesses act on data as it arrives, providing insights from sources such as IoT devices, transaction logs, and customer interactions. Kafka processes data continuously, ensuring time-sensitive applications get the data they need without delay.

How Kafka supports event-driven architectures

Kafka is a core component of event-driven architectures, where systems respond to events as they happen. It separates producers and consumers of data, creating flexibility in system design.

Kafka’s structure helps businesses build scalable systems that process data streams independently, improving workflow efficiency and providing quick reactions to data changes.

Key differences between Kafka and traditional messaging systems

Kafka stands apart from traditional messaging systems like RabbitMQ and ActiveMQ. While these systems focus mainly on message queuing, Kafka stores data in a distributed log.

This design offers better scalability and message retention, letting multiple consumers read the same data at different times. Businesses can reprocess data as needed, which is critical when managing large volumes of real-time data.
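The contrast between queue and log semantics can be sketched with a small toy model. This is a deliberately simplified illustration, not how Kafka or any queue-based broker is implemented; the `SimpleQueue` and `SimpleLog` classes are hypothetical names invented for this example, and real queue-based brokers also offer fan-out patterns that are not modeled here.

```python
from collections import deque


class SimpleQueue:
    """Queue semantics: a message is gone once any consumer takes it."""

    def __init__(self):
        self._items = deque()

    def send(self, message):
        self._items.append(message)

    def receive(self):
        return self._items.popleft() if self._items else None


class SimpleLog:
    """Log semantics: records stay put; each reader chooses where to start."""

    def __init__(self):
        self._records = []

    def append(self, message):
        self._records.append(message)
        return len(self._records) - 1  # offset of the new record

    def read(self, offset):
        """Return every record from `offset` onward without removing anything."""
        return self._records[offset:]


queue = SimpleQueue()
log = SimpleLog()
for event in ["signup", "login", "purchase"]:
    queue.send(event)
    log.append(event)

# Queue: the first reader drains the messages, the second finds nothing.
queue_reader_a = [queue.receive() for _ in range(3)]
queue_reader_b = queue.receive()

# Log: both readers can see the retained history, each from its own offset.
log_reader_a = log.read(0)
log_reader_b = log.read(1)
```

Because the log keeps its records, a new reader can be added later and still replay earlier events, which is the property that makes reprocessing possible.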

The origin of Apache Kafka at LinkedIn

Apache Kafka was created at LinkedIn to handle the growing demands of real-time data processing. As user activity surged, traditional systems failed to keep up with the scale and speed required.

Why LinkedIn needed Kafka

LinkedIn needed a system to manage millions of real-time events, from news feeds to analytics. Existing tools lacked the capacity for reliable data delivery and quick processing at scale.

How Kafka addressed these challenges

Kafka introduced a distributed design that handled massive data streams efficiently. Its ability to retain messages also supported historical data reprocessing, solving LinkedIn’s data bottlenecks.

Kafka’s journey beyond LinkedIn

Open-sourced in 2011, Kafka has grown into a widely adopted event streaming platform, powering real-time applications across various industries globally.

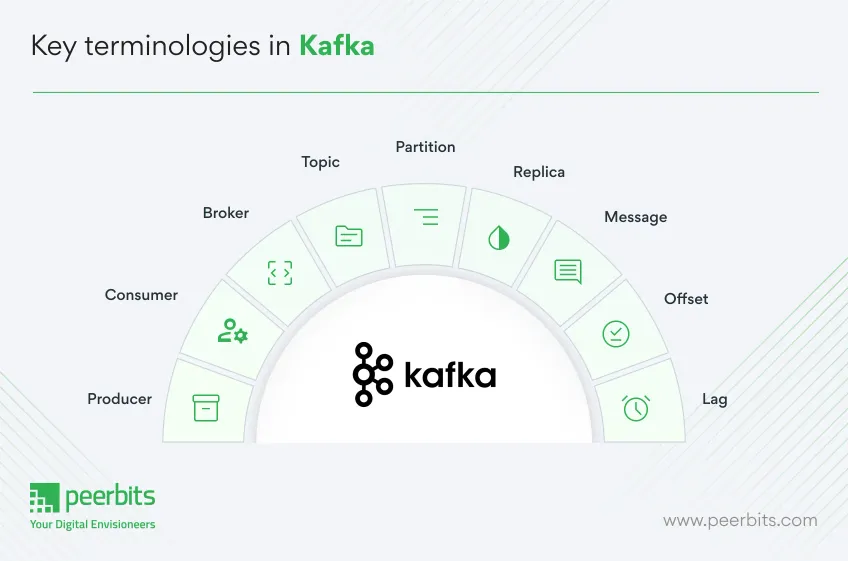

Core terminologies in Apache Kafka

To understand how Apache Kafka functions, it helps to grasp the core terminology that defines its architecture.

These foundational concepts are central to Kafka's design, helping businesses create scalable, reliable, and efficient data systems. Here’s a detailed look at these terms and their significance.

Producer

A producer is the starting point of data in Kafka. It is responsible for creating and sending messages to Kafka topics. Producers can send data to multiple topics, and can either specify the partition within a topic where the data should be stored or let Kafka choose one, typically based on the message key.

This flexibility helps manage data distribution efficiently and ensures that messages are available for real-time consumption.

Consumer

Consumers retrieve and process messages from Kafka topics. They subscribe to one or more topics and fetch data based on offsets. Offsets let consumers pick up where they left off, which helps avoid missed or duplicated data.

Consumers can operate individually or within a group, supporting scalable message processing by dividing tasks across multiple consumers.
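How a group divides work can be sketched as a simple round-robin assignment of partitions to consumers. Kafka clients ship several assignment strategies; the `assign_partitions` function below is a hypothetical, simplified stand-in for illustration and is not the client's actual code.

```python
def assign_partitions(partitions, consumers):
    """Spread partitions across consumers round-robin (illustrative only)."""
    assignment = {consumer: [] for consumer in consumers}
    for index, partition in enumerate(sorted(partitions)):
        owner = consumers[index % len(consumers)]
        assignment[owner].append(partition)
    return assignment


partitions = [0, 1, 2, 3, 4, 5]

two_consumers = assign_partitions(partitions, ["c1", "c2"])
three_consumers = assign_partitions(partitions, ["c1", "c2", "c3"])

# More consumers than partitions: the extra consumer receives nothing to do.
too_many = assign_partitions([0, 1], ["c1", "c2", "c3"])
```

The last case shows why the partition count caps the useful size of a group: within one group, a partition is read by only one consumer at a time.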

Broker

Kafka brokers are the servers in a Kafka cluster that store and distribute data. A cluster can have multiple brokers, each managing specific partitions of topics. Brokers coordinate with producers to receive data and with consumers to deliver it.

They help with efficient load balancing and redundancy by replicating partitions across the cluster.

Topic

A topic is the core channel in Kafka’s messaging system. Producers write messages to a topic, and consumers read messages from it.

Topics are further divided into partitions, making it easier to manage and process large volumes of data. This structure ensures that Kafka can handle high-throughput workloads effectively.

Partition

Partitions are subdivisions of topics in Kafka. Each partition is stored on a broker and processed separately. This design distributes data across multiple brokers in a cluster, helping Kafka manage large volumes efficiently.

Parallel processing is achieved as different consumers handle separate partitions simultaneously.

Replica

Replicas are copies of partitions that are stored on different brokers. They provide fault tolerance by ensuring that data is not lost if a broker fails.

Kafka uses leaders and followers among replicas, where the leader manages read and write requests while followers synchronize with the leader to maintain data consistency.
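The leader and follower roles can be illustrated with a toy failover model. This is a sketch under simplifying assumptions: the `ReplicatedPartition` class is hypothetical, it promotes the first surviving replica in list order, and it ignores the replica synchronization state that real Kafka takes into account when choosing a new leader.

```python
class ReplicatedPartition:
    """Toy model of one partition copied onto several brokers."""

    def __init__(self, replica_brokers):
        self.replicas = list(replica_brokers)  # broker ids, in preference order
        self.live = set(replica_brokers)       # brokers currently up
        self.leader = self.replicas[0]

    def fail(self, broker_id):
        """Mark a broker as down and promote a surviving replica if needed."""
        self.live.discard(broker_id)
        if self.leader == broker_id:
            survivors = [b for b in self.replicas if b in self.live]
            self.leader = survivors[0] if survivors else None
        return self.leader


partition = ReplicatedPartition([1, 2, 3])
first_leader = partition.leader

after_follower_loss = partition.fail(3)  # losing a follower changes nothing
after_leader_loss = partition.fail(1)    # losing the leader promotes a follower
after_total_loss = partition.fail(2)     # no replicas left: partition unavailable
```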

Message

A message is the smallest unit of data in Kafka. Its main components are a key, a value, and a timestamp; messages can also carry optional headers.

The key helps determine the partition where the message will be stored, the value contains the actual data, and the timestamp records when the message was created.
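A minimal sketch of a message and a key-based partition choice might look like the following. The `Message` class and `choose_partition` function are hypothetical names for this example, and CRC32 is an assumption standing in for whatever hash a real Kafka client uses; only the idea of "stable hash of the key, modulo the partition count" is being illustrated.

```python
import time
import zlib
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Message:
    key: Optional[bytes]
    value: bytes
    # Creation time in milliseconds since the epoch.
    timestamp_ms: int = field(default_factory=lambda: int(time.time() * 1000))


def choose_partition(message, num_partitions):
    """Map a keyed message to a partition with a stable hash.

    Assumption: CRC32 is a stand-in for the hash used by real clients.
    """
    if message.key is None:
        raise ValueError("this sketch only handles keyed messages")
    return zlib.crc32(message.key) % num_partitions


order_created = Message(key=b"customer-42", value=b'{"event": "order_created"}')
order_paid = Message(key=b"customer-42", value=b'{"event": "order_paid"}')

# Same key, same partition: both events for customer-42 land together.
same_partition = choose_partition(order_created, 6) == choose_partition(order_paid, 6)
```

Keeping all messages for one key in one partition is what lets a consumer see that key's events in the order they were written.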

Offset

Offsets are unique identifiers assigned to messages within a partition. They help Kafka track the position of each message, so consumers can read data in the correct order.

Offsets also let consumers resume reading from a specific point, maintaining data accuracy and reliability.
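Resuming from a saved offset can be sketched as follows. The `PartitionReader` class is a hypothetical toy, with a Python list standing in for a partition; it is not the Kafka consumer API.

```python
class PartitionReader:
    """Reads one partition and remembers the next offset to process."""

    def __init__(self, records, committed_offset=0):
        self.records = records
        self.position = committed_offset

    def poll(self, max_records):
        """Return up to `max_records` records and advance the position."""
        batch = self.records[self.position:self.position + max_records]
        self.position += len(batch)
        return batch

    def commit(self):
        """Return the offset a restarted reader should resume from."""
        return self.position


partition = ["m0", "m1", "m2", "m3", "m4"]

reader = PartitionReader(partition)
first_batch = reader.poll(2)
saved_offset = reader.commit()

# Simulate a restart: a new reader resumes from the committed offset.
restarted = PartitionReader(partition, committed_offset=saved_offset)
second_batch = restarted.poll(10)
```

One design point worth noting: if a consumer fails after processing a batch but before committing, it will re-read that batch on restart, so processing logic usually needs to tolerate occasional repeats.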

Lag

Lag is the difference between the offset of the latest message produced to a partition and the offset of the last message a consumer has processed. High lag indicates that a consumer is not keeping up with the incoming data, which can impact real-time processing.

Monitoring lag helps businesses maintain optimal performance and avoid delays in critical applications.
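As a rough sketch, lag can be computed per partition by subtracting the consumer's committed offset from the partition's latest offset. The offsets and the alert threshold of 100 below are made-up illustrative values, and `consumer_lag` is a hypothetical helper rather than part of any Kafka tool.

```python
def consumer_lag(log_end_offsets, committed_offsets):
    """Per-partition lag: newest offset minus the consumer's committed offset."""
    return {
        partition: end - committed_offsets.get(partition, 0)
        for partition, end in log_end_offsets.items()
    }


# Hypothetical snapshot of a three-partition topic.
log_end_offsets = {0: 1500, 1: 1480, 2: 1510}
committed_offsets = {0: 1500, 1: 1200, 2: 1505}

lag_by_partition = consumer_lag(log_end_offsets, committed_offsets)
total_lag = sum(lag_by_partition.values())

# Flag partitions whose lag exceeds an arbitrary threshold.
lagging = [p for p, lag in lag_by_partition.items() if lag > 100]
```

Looking at lag per partition rather than only in total helps spot a single slow or stuck consumer that an aggregate number would hide.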

How does Apache Kafka work?

Apache Kafka operates through a well-defined flow of data, designed to ensure scalability, fault tolerance, and performance.

Here’s a step-by-step breakdown of how Kafka’s system works, from the producer sending data to the consumer processing it.

Overview of Kafka’s architecture

Kafka’s architecture consists of four key components: Producers, Topics, Brokers, and Consumers.

- Producers create and send data to Kafka topics.

- Topics act as channels where producers send data and consumers retrieve it.

- Brokers manage the storage of data in partitions and ensure reliable data delivery.

- Consumers read data from topics and process it.

How data flows through Kafka

- Producers send data: Producers publish messages to specific topics in Kafka. Each message can include a key, value, and timestamp.

- Messages are stored in partitions: Kafka divides topics into partitions for better scalability. Each partition can be hosted on different brokers to balance the load.

- Brokers manage data: Kafka brokers receive the messages, store them in partitions, and distribute them across multiple servers. The brokers also manage the replication of partitions for fault tolerance.

- Consumers retrieve data: Consumers subscribe to topics and fetch data. Each consumer group processes messages independently, enabling parallel processing and scalability.

How Kafka achieves scalability and fault tolerance

- Replication: Kafka replicates partitions across brokers to prevent data loss, with a leader handling requests and followers maintaining copies.

- Partitioning: Topics are divided into partitions, which supports parallel processing by consumers working on separate partitions.

- Retention: Kafka retains messages for a configurable period, supporting reprocessing or archiving without excess storage.
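The retention idea from the list above can be sketched as a time-based filter. This is a simplification: the `apply_retention` function is hypothetical and filters individual records, whereas real Kafka removes data in larger chunks, and the seven-day window is only an example value.

```python
DAY_MS = 24 * 60 * 60 * 1000


def apply_retention(records, now_ms, retention_ms):
    """Keep only (timestamp_ms, value) records inside the retention window."""
    cutoff = now_ms - retention_ms
    return [record for record in records if record[0] >= cutoff]


now_ms = 10 * DAY_MS
records = [
    (1 * DAY_MS, "old"),
    (5 * DAY_MS, "recent"),
    (9 * DAY_MS, "new"),
]

# With a seven-day window, anything older than day 3 is dropped.
kept = apply_retention(records, now_ms, retention_ms=7 * DAY_MS)
kept_values = [value for _, value in kept]
```

A longer window allows consumers to replay further back at the cost of more disk space, so the setting is a trade-off between reprocessing needs and storage.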

Kafka’s architecture and workflow provide a reliable, high-performance messaging system that scales with the demands of modern applications.

Why choose Apache Kafka?

Apache Kafka is a solution for businesses that need to handle large-scale, real-time data flows. Its scalability, high performance, and durability make it a top choice for modern data architectures.

- Multiple producers & consumers: Kafka accommodates multiple producers and consumers, providing scalability and flexibility. This setup helps businesses manage fluctuating data loads and diverse processing needs efficiently.

- Disk-based retention: Kafka retains data on disk, making it available for future use, stream processing, and auditing. This feature keeps valuable data accessible even after it has been produced.

- High performance: Kafka can process millions of messages per second and handles large volumes of real-time data without losing speed or efficiency.

- Scalability: Kafka’s architecture supports horizontal scaling, where adding more brokers helps distribute the load without compromising performance. The system can grow alongside the business.

- Durability & fault tolerance: Kafka replicates data across multiple brokers. In case of failures, the data remains intact and accessible, maintaining the system’s reliability.

- Real-time processing: Kafka plays an important role in managing real-time data streams, making it ideal for constructing real-time data pipelines that support quick decision-making and operations.

This set of features positions Apache Kafka as a strong choice for businesses looking for reliable, scalable, and high-performance data infrastructure.

How Kafka stacks up against other messaging solutions

Apache Kafka stands out for its ability to handle large-scale, real-time data streams, offering unique advantages over other messaging systems and traditional databases.

Below is a comparison of Kafka with RabbitMQ, ActiveMQ, and traditional databases to highlight its strengths in scalability, durability, and performance for modern data architectures.

| Aspect | Kafka | RabbitMQ | ActiveMQ |
|---|---|---|---|
| Data streaming | Best suited for large-scale real-time data streaming and high-throughput. | Primarily designed for messaging with lower throughput, suited for traditional message queueing. | Handles messaging well but not optimized for high-volume, real-time data streams. |
| Scalability | Horizontal scaling with partitioning and replication. | Supports clustering, but scaling throughput is generally harder than adding partitions and brokers in Kafka. | Horizontal scalability is limited compared to Kafka. |
| Message durability | Replication and distributed architecture ensure data availability even in case of failures. | Offers durable and replicated queues, but has no built-in partitioned log like Kafka. | Provides durability but less flexible in terms of high availability and scalability. |
| Real-time processing | Designed for real-time processing, making it ideal for event-driven architectures. | Better suited for transactional message queues rather than real-time data pipelines. | Supports real-time messaging but lacks Kafka’s high throughput and scalability for real-time data streams. |
| Handling high volume | Handles millions of messages per second without compromising performance. | Typically handles lower message volumes than Kafka. | Struggles with handling high-volume data in the same way Kafka does. |
| Use case | Best for event streaming, large-scale data ingestion, and real-time analytics. | Suited for handling traditional messaging and request-response patterns. | Suited for point-to-point messaging but not optimal for high-volume, real-time applications. |

Kafka vs. traditional databases

When it comes to managing real-time data and large-scale message processing, the differences between Apache Kafka and traditional databases become quite apparent.

| Aspect | Kafka | Traditional databases |
|---|---|---|
| Primary use | Event streaming, real-time data processing, and large-scale data ingestion. | Data storage, retrieval, and management for structured data. |
| Data flow | Designed to handle real-time data streams and event-driven architectures. | Focuses on data transactions and relational storage. |
| Scalability | Scales horizontally with partitioning and replication for handling large data volumes. | Generally scales vertically with more storage and computing resources; scaling horizontally can be complex. |
| Real-time processing | Built for real-time data processing and event-driven use cases. | Not designed for real-time streaming or immediate data consumption. |
| Data storage | Data is stored temporarily, with configurable retention periods for future use or reprocessing. | Stores data permanently in tables and is optimized for retrieval. |
| Performance | Handles high-throughput and high-volume data streams, supporting millions of messages per second. | Optimized for data consistency and complex queries but struggles with handling high-volume, real-time data streams. |
| Fault tolerance | Built-in replication and partitioning ensure data availability and prevent data loss. | Fault tolerance depends on the database’s configuration (e.g., replication and backups). |
| Use case | Ideal for real-time analytics, event streaming, log aggregation, and data pipelines. | Best for transactional systems, data warehousing, and long-term structured data storage. |

Challenges and considerations when using Kafka

While Apache Kafka offers a solid solution for large-scale data streaming, businesses should consider the following challenges when implementing it:

- Complex setup: Setting up Kafka can be difficult, especially for newcomers. Proper configuration of brokers, topics, partitions, and replication is required to achieve optimal performance and reliability.

- Resource management: Kafka’s performance depends on sufficient hardware resources. If the system isn't properly sized to handle the expected workload, performance may slow down, and data loss could occur.

- Data replication and fault tolerance: Although Kafka offers fault tolerance through data replication, managing the increased resource consumption from this replication can be challenging. Large datasets add overhead and require more storage.

- Message ordering and processing delays: Kafka guarantees message order within a partition but not across all partitions. To handle message order and processing delays, effective partition management and consumer configuration are necessary.

- Partitioning strategy: Choosing the right number of partitions and how to distribute data among them is essential for performance. Too few partitions limit how many consumers can work in parallel, while too many create unnecessary overhead on brokers and clients.

- Consumer group management: Efficient message consumption is key to maintaining Kafka's performance. Proper management of consumer groups and load balancing ensures the system processes messages effectively without overloading individual consumers.
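The ordering point from the list above can be made concrete with a small simulation. Everything here is a hypothetical toy: the `key_to_partition` mapping is fixed by hand rather than computed, and the consumer is assumed to drain partition 1 before partition 0 to make the effect visible.

```python
from collections import defaultdict

# Events in the order they were produced: (sequence number, key).
produced = [(1, "A"), (2, "B"), (3, "A"), (4, "B"), (5, "A")]

# Hypothetical fixed mapping of keys to partitions.
key_to_partition = {"A": 0, "B": 1}

partitions = defaultdict(list)
for seq, key in produced:
    partitions[key_to_partition[key]].append((seq, key))

# A consumer that happens to read partition 1 before partition 0.
consumed = partitions[1] + partitions[0]
consumed_seqs = [seq for seq, _ in consumed]

# Order across partitions is lost...
globally_ordered = consumed_seqs == sorted(consumed_seqs)

# ...but each key's events still arrive in the order they were produced.
per_key_ordered = all(
    [s for s, k in consumed if k == key] == sorted(s for s, k in consumed if k == key)
    for key in key_to_partition
)
```

In practice this means events that must be processed in sequence, such as updates to one account, should share a key so they land in the same partition.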

Kafka ecosystem and integrations

The Kafka ecosystem includes tools that expand its functionality and simplify integration with external systems.

- Kafka Streams: A client library for real-time stream processing of data stored in Kafka topics.

- Kafka Connect: Facilitates integration with external systems like databases and messaging platforms via pre-built connectors.

- Schema Registry: Ensures consistency by managing schemas for Kafka messages, preventing data inconsistencies.

- ksqlDB (formerly KSQL): A SQL-like interface for querying and processing Kafka streams in real time.

Best practices for implementing Apache Kafka

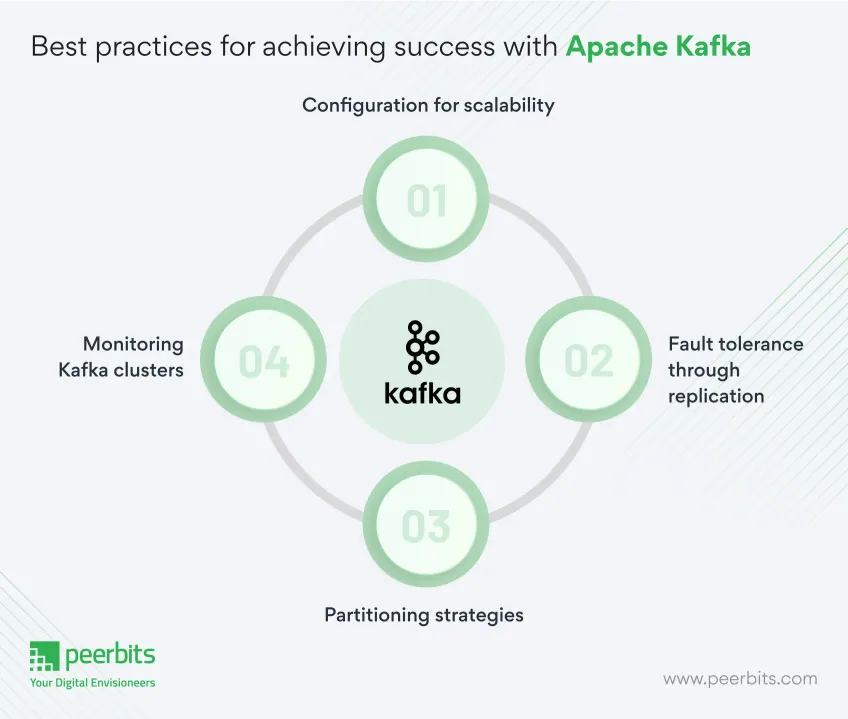

To make Apache Kafka work efficiently, it's important to follow certain practices during setup and maintenance. Here are some tips for a smooth Kafka implementation:

- Configuration for scalability: Set up Kafka to handle growing data needs by adding brokers and adjusting partitions as necessary.

- Fault tolerance through replication: Implement replication to maintain data availability and minimize the risk of data loss during system failures.

- Partitioning strategies: Determine the right number of partitions to evenly distribute the workload and optimize performance.

- Monitoring Kafka clusters: Use monitoring tools like Kafka Manager (now known as CMAK) and Confluent Control Center to keep track of system performance and address any potential issues.
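For the partitioning tip above, one commonly cited rule of thumb is to size the partition count from the target throughput and the throughput a single partition can sustain on the producing and consuming sides. The sketch below assumes that rule; the `estimate_partitions` function and all throughput figures are hypothetical, and real per-partition numbers have to be measured on your own hardware and workload.

```python
import math


def estimate_partitions(target_mb_s, producer_mb_s_per_partition,
                        consumer_mb_s_per_partition):
    """Rule-of-thumb partition count from measured per-partition throughput."""
    needed_for_producers = math.ceil(target_mb_s / producer_mb_s_per_partition)
    needed_for_consumers = math.ceil(target_mb_s / consumer_mb_s_per_partition)
    # The slower side determines how many partitions are needed.
    return max(needed_for_producers, needed_for_consumers)


# Hypothetical figures: 200 MB/s target, 20 MB/s per partition when producing,
# 25 MB/s per partition when consuming.
estimate = estimate_partitions(200, 20, 25)
```

Treat the result as a starting point rather than a fixed answer, and leave some headroom for growth, since changing the partition count later affects how keys map to partitions.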

Conclusion

Apache Kafka is a powerful tool for businesses dealing with large-scale, real-time data. Its ability to handle high volumes of streaming data, provide scalability, and support fault tolerance makes it an ideal choice for modern data architectures.

Businesses can build efficient and real-time data pipelines by integrating Kafka into their infrastructure, supporting growth and operational efficiency.

Kafka's ecosystem provides the flexibility and tools needed to process large datasets and build event-driven applications, meeting a wide range of requirements.

At Peerbits, we specialize in helping businesses implement Apache Kafka to optimize their data infrastructure and drive impactful solutions.